
From Prompts to Context: The New Architecture Behind Reliable AI

By Prady K | Published on DataGuy.in

Introduction: Moving Beyond Prompts

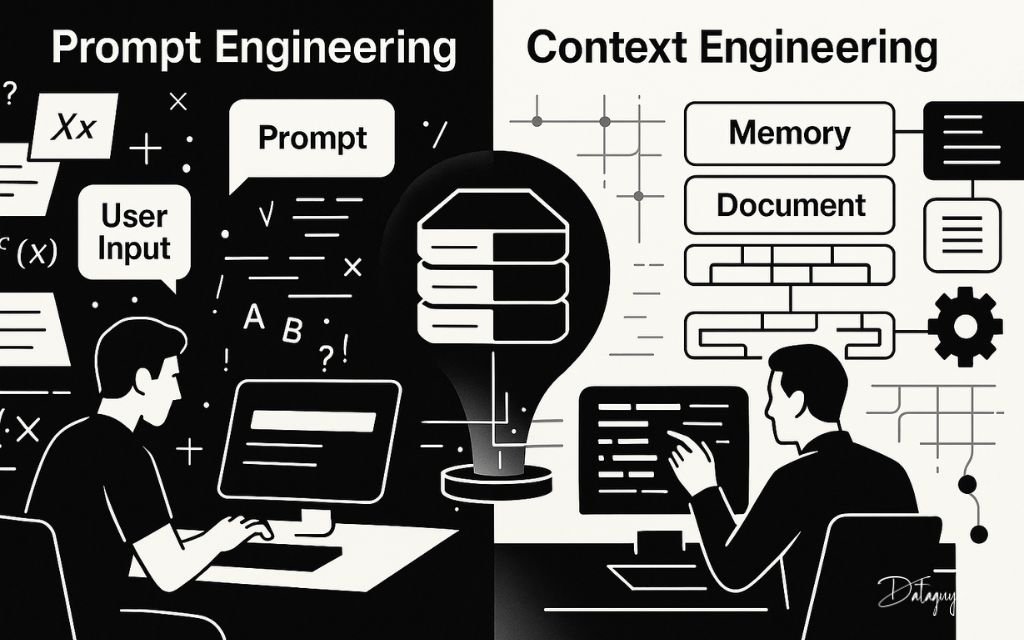

In the early days of working with large language models (LLMs), prompt engineering was the talk of the town. Clever phrasing, chained instructions, few-shot examples: it all felt like magic. But as models grew more capable, a new challenge emerged: refining the prompt alone wasn’t enough.

Today, we stand at the edge of a shift — from manipulating prompts to designing context. Welcome to the era of Context Engineering, where the real power lies not in the prompt itself, but in the world that surrounds it.

Step 1: Understand the Difference

What is Prompt Engineering?

Prompt Engineering is the art of crafting the exact sequence of words that guide an LLM’s behavior. It includes instructions, examples, and formatting — all carefully curated in the input prompt.
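To make the definition concrete, here is a minimal sketch of a classic prompt-engineering artifact: an instruction, a few worked examples, and an output cue, all packed into one string. The function and class names are hypothetical and for illustration only. No model is called; the point is that everything the model sees lives in this single static prompt.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked input/output pair shown to the model."""
    text: str
    label: str


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Combine an instruction, few-shot examples, and an output cue into one prompt string."""
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts += [f"Input: {ex.text}", f"Output: {ex.label}", ""]
    # Ending on "Output:" nudges the model to answer in the same format as the examples.
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [Example("Loved every minute of it.", "positive"),
     Example("The battery died after a day.", "negative")],
    "Setup took five minutes and it just works.",
)
print(prompt)
```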

What is Context Engineering?

Context Engineering, on the other hand, is the broader discipline of shaping the entire input environment that an LLM uses to reason, generate, or decide. It includes prompts, but goes far beyond — incorporating memory, retrieval systems, dynamic data feeds, roles, metadata, and even pre-conditioning strategies.

Step 2: Think in Terms of Systems, Not Strings

Context engineering treats the LLM not as a tool to be “tricked” with clever inputs, but as a reasoning engine embedded within a larger system. You’re not writing one-off instructions — you’re architecting how knowledge, tasks, history, and identity flow into the model.

This is especially important for agentic use cases, long-running conversations, or multi-modal workflows, where relying on a static prompt falls short.

Step 3: Break Down the Core Building Blocks of Context

Here are the major elements a context engineer actively manages:

- Prompt Template: Instruction format, input/output schemas, guiding examples

- System Message: Role and behavioral instructions set at the start

- Memory: What the system stores about past interactions and feeds back to the model, which retains nothing between calls on its own

- Retrieval: External documents, typically located via vector search, injected dynamically at query time

- Metadata: User ID, time, location, domain — all can shape output

- Preloading: Injecting anticipated intent or relevant topics before the actual user query arrives
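As a rough illustration of how these pieces come together, the sketch below collects a system message, metadata, remembered turns, retrieved documents, and preloaded topics, then renders them into a list of chat messages. The `{"role": ..., "content": ...}` shape is an assumption that mirrors a common chat-API convention. `ContextBuilder` and all example values are hypothetical, and nothing is sent to any model.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBuilder:
    """Gathers context elements and renders them as a list of chat messages.

    The {"role": ..., "content": ...} message shape follows a common chat-API
    convention (an assumption); no specific provider is called here.
    """
    system_message: str                                          # role + behavior
    metadata: dict[str, str] = field(default_factory=dict)       # user ID, locale, ...
    memory: list[dict[str, str]] = field(default_factory=list)   # stored prior turns
    retrieved_docs: list[str] = field(default_factory=list)      # output of a retriever
    preloaded_topics: list[str] = field(default_factory=list)    # anticipated intent

    def build(self, user_query: str) -> list[dict[str, str]]:
        sections = [self.system_message]
        if self.metadata:
            pairs = ", ".join(f"{k}={v}" for k, v in sorted(self.metadata.items()))
            sections.append(f"Request metadata: {pairs}")
        if self.preloaded_topics:
            sections.append("Likely topics: " + ", ".join(self.preloaded_topics))
        if self.retrieved_docs:
            numbered = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(self.retrieved_docs, 1))
            sections.append("Reference documents:\n" + numbered)
        messages = [{"role": "system", "content": "\n\n".join(sections)}]
        messages.extend(self.memory)                  # replay remembered turns in order
        messages.append({"role": "user", "content": user_query})
        return messages


builder = ContextBuilder(
    system_message="You are a concise support assistant for an online store.",
    metadata={"user_id": "u-123", "locale": "en-GB"},
    memory=[{"role": "user", "content": "Please keep answers short."},
            {"role": "assistant", "content": "Understood."}],
    retrieved_docs=["(example doc) Refunds go back to the original payment method."],
    preloaded_topics=["refunds"],
)
messages = builder.build("Where will my refund be sent?")
```

Each element can change independently from request to request. For example, a different user ID or a fresh retrieval result alters the context without anyone rewriting the prompt text.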

A prompt engineer edits lines. A context engineer designs orchestration.

Step 4: Use the Right Tool for the Right Job

Prompt engineering shines in constrained tasks, like writing a poem, summarizing an article, or producing structured output. But when you’re building:

- A personal assistant that remembers your style

- An AI agent that autonomously executes tasks across steps

- A chatbot that adapts to dynamic knowledge bases

- A co-pilot that customizes its behavior to user persona

…then prompt design alone collapses. You need architecture. You need context engineering.
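In the multi-step agent case, the "architecture" largely comes down to state the application keeps and feeds back in at every step. The minimal sketch below assumes a fixed plan. The model-plus-tool call is replaced with a hypothetical stub, `fake_act`, and every name here is illustrative rather than taken from any agent framework.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    """State the application keeps between steps; the model itself keeps none."""
    goal: str
    plan: list[str]
    observations: list[str] = field(default_factory=list)

    def render_context(self) -> str:
        """Rebuild the context for the next model call from the stored state."""
        lines = [f"Goal: {self.goal}", "Completed steps:"]
        lines += [f"- {step} -> {obs}" for step, obs in zip(self.plan, self.observations)]
        done = len(self.observations)
        if done < len(self.plan):
            lines.append(f"Next step: {self.plan[done]}")
        return "\n".join(lines)


def run_agent(state: AgentState, act: Callable[[str], str]) -> AgentState:
    """Run every plan step, feeding the accumulated state back in each time."""
    while len(state.observations) < len(state.plan):
        context = state.render_context()   # what a real model call would receive
        state.observations.append(act(context))
    return state


def fake_act(context: str) -> str:
    # Hypothetical stand-in for an LLM plus tool call: report the step it was given.
    return "done " + context.splitlines()[-1].removeprefix("Next step: ")


state = run_agent(
    AgentState("Publish weekly report", ["fetch metrics", "draft summary", "email team"]),
    fake_act,
)
print(state.observations)  # ['done fetch metrics', 'done draft summary', 'done email team']
```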

Step 5: Understand How It Affects Output Quality

A prompt is limited by what you can squeeze into a few hundred or thousand tokens. Context engineering, however, leverages tooling like:

- RAG (Retrieval-Augmented Generation): Injecting relevant documents retrieved at query time

- Session memory: Capturing preferences, history, goals

- Agent state: Enabling reflection and planning across steps

- Fine-grained control: Modulating temperature, tool usage, API actions

This means responses are not just relevant — they’re adaptive, persistent, and personalized.
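The retrieval half of RAG can be sketched in a few lines. This toy version swaps in bag-of-words cosine similarity for the learned embeddings and vector database a production system would typically use. The function names and the sample documents are hypothetical. What carries over is the shape: score documents against the query, keep the top k, and inject them into the prompt.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts: a toy stand-in for a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def augment(query: str, docs: list[str], k: int = 2) -> str:
    """Inject the retrieved documents into the prompt ahead of the question."""
    sources = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {query}"


docs = [
    "Refunds are issued to the original payment method.",
    "Our office is closed on public holidays.",
    "Refund requests must include the order number.",
]
print(augment("How do I request a refund for my order?", docs, k=1))
```

Note that exact word matching misses "refunds" versus "refund". Closing gaps like this is one reason real systems prefer embeddings.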

Step 6: Apply the Right Mindset

Don’t think like a writer; think like an architect. As a context engineer, you’re not crafting a one-liner; you’re constructing scaffolding around the model’s reasoning space.

You influence what the model knows, remembers, forgets, and prioritizes — not by giving it one big prompt, but by feeding it the right experience over time.
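Deciding what the model "forgets" is often a plain budgeting decision. The sketch below always keeps the system message, a deliberate priority, and then keeps as many of the most recent turns as fit within a token budget, dropping the oldest first. As an assumption for simplicity, it counts whitespace-separated words as tokens; a real system would use the model's own tokenizer. The function names are hypothetical.

```python
def count_tokens(text: str) -> int:
    # Rough proxy (assumption): whitespace-separated words, not real model tokens.
    return len(text.split())


def fit_history(system: str, turns: list[str], budget: int) -> list[str]:
    """Keep the system message plus the most recent turns that fit within budget.

    Older turns are dropped first, so the model 'forgets' the distant past while
    always retaining its instructions.
    """
    used = count_tokens(system)
    if used > budget:
        raise ValueError("system message alone exceeds the budget")
    kept: list[str] = []
    for turn in reversed(turns):            # walk from newest to oldest
        cost = count_tokens(turn)
        if used + cost > budget:
            break                           # stop at the first turn that doesn't fit
        kept.append(turn)
        used += cost
    return [system] + list(reversed(kept))  # restore chronological order


turns = ["user: my name is Ana", "assistant: hi Ana", "user: what's the weather today"]
print(fit_history("Be brief.", turns, budget=10))
# ['Be brief.', 'assistant: hi Ana', "user: what's the weather today"]
```

Here the user's name is the first thing dropped. That is exactly the kind of fact a more deliberate design might summarize or pin into long-term memory instead.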

Side-by-Side: Prompt vs Context Engineering

| Aspect | Prompt Engineering | Context Engineering |
|---|---|---|
| Focus | Static string of instructions | Dynamic orchestration of inputs |
| Scope | Prompt-only | System messages, memory, retrieval, persona, state |
| Tools | LLM playground, direct API calls | RAG, memory systems, tool use, agentic loop control |
| Use Cases | Single-shot tasks | Multi-turn agents, copilots, workflows, personalization |
| Examples | “Summarize this article in 3 points” | “Remember the user’s preferences across sessions” |
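The table's last row, remembering preferences across sessions, comes down to persistence outside the model. As a minimal sketch, assume a local JSON file keyed by user ID. A production system would more likely use a database, and `PreferenceStore` is a hypothetical name. A new session loads what earlier sessions saved and renders it as context text.

```python
import json
import tempfile
from pathlib import Path


class PreferenceStore:
    """Persists per-user preferences to a JSON file so they survive across sessions."""

    def __init__(self, path: Path):
        self.path = path

    def _load(self) -> dict[str, dict[str, str]]:
        if not self.path.exists():
            return {}
        return json.loads(self.path.read_text(encoding="utf-8"))

    def remember(self, user_id: str, key: str, value: str) -> None:
        data = self._load()
        data.setdefault(user_id, {})[key] = value
        self.path.write_text(json.dumps(data, indent=2), encoding="utf-8")

    def as_context(self, user_id: str) -> str:
        """Render stored preferences as text to inject into the next session's context."""
        prefs = self._load().get(user_id, {})
        if not prefs:
            return ""
        return "Known user preferences:\n" + "\n".join(
            f"- {k}: {v}" for k, v in sorted(prefs.items())
        )


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "prefs.json"
    PreferenceStore(path).remember("u-123", "tone", "formal")  # session 1
    later = PreferenceStore(path)                              # session 2: a fresh object
    context = later.as_context("u-123")
    unknown = later.as_context("u-999")

print(context)
```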

Origin of the Term: Context Engineering

Andrej Karpathy, a leading voice in AI, helped popularize the term by explicitly favoring it over “prompt engineering.” His definition captures what building real-world LLM applications actually involves:

“Context engineering is the delicate art and science of filling the context window with just the right information for the next step.”

— Andrej Karpathy
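One way to read "just the right information" is as a budgeting problem: every candidate piece of context has a value and a cost in tokens. The sketch below uses a greedy relevance-per-token heuristic for this 0/1 knapsack problem. It is fast and usually reasonable, but not guaranteed optimal. The relevance scores and token counts are hypothetical inputs, assumed to come from a retriever and a tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    relevance: float  # e.g. a retrieval score in [0, 1] (assumed input)
    tokens: int       # cost in the context window (assumed input)


def select_context(candidates: list[Candidate], budget: int) -> list[Candidate]:
    """Greedily pick the highest relevance-per-token items that fit the budget."""
    chosen: list[Candidate] = []
    used = 0
    ranked = sorted(candidates, key=lambda item: item.relevance / max(item.tokens, 1), reverse=True)
    for c in ranked:
        if used + c.tokens <= budget:  # skip items that don't fit, but keep looking
            chosen.append(c)
            used += c.tokens
    return chosen


candidates = [
    Candidate("User's open support ticket", 0.9, 50),
    Candidate("Full product manual", 0.8, 2000),
    Candidate("Last three chat turns", 0.7, 120),
    Candidate("Company holiday calendar", 0.1, 40),
]
print([c.text for c in select_context(candidates, budget=200)])
# ["User's open support ticket", 'Last three chat turns']
```

The highly relevant but very long manual loses to shorter, denser items. That trade-off is typical when the window, not relevance alone, is the binding constraint.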

Conclusion: This Isn’t Prompt Hacking. It’s Interface Design for Intelligence.

Context Engineering isn’t a replacement for prompt engineering — it’s the next level. Think of it as the UX layer for LLM-based intelligence systems. Instead of just telling the model what to do, you build the space within which it thinks.

In the coming years, the most powerful AI systems won’t be the ones with the best prompts — they’ll be the ones with the most elegantly engineered context.