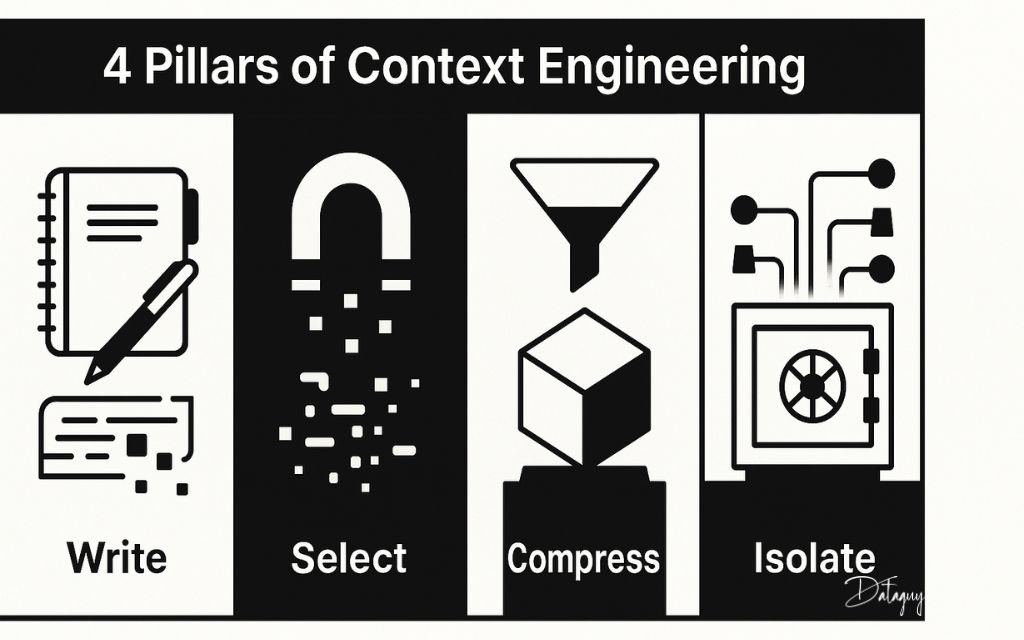

The 4 Pillars of Context Engineering – Write, Select, Compress, Isolate

By Prady K | Published on DataGuy.in

Introduction: How AI Systems Stay Contextually Sharp

If you’ve ever wondered how AI systems manage vast amounts of information without losing relevance or falling into confusion, welcome to the core mechanics of Context Engineering.

In this post, we dive deep into the four essential strategies—Write, Select, Compress, and Isolate—that help AI models operate smarter, faster, and more reliably. Let’s walk through each pillar with real-world examples and practical metaphors, so you can understand how intelligent systems maintain meaning at scale.

Step 1: WRITE — Memory That Outlasts a Prompt

AI systems don’t have memory in the traditional sense: a model carries nothing from one session to the next unless the application stores it. The Write strategy is about capturing and storing information beyond the immediate session. Think of it as taking organized notes for long-term use.

Techniques Used:

- Scratchpads and persistent notes

- User profile storage

- External memory/state objects

Where It Shows Up:

- A coding assistant writing pseudocode to a scratchpad to resume later

- A tutoring bot remembering your past mistakes to personalize future lessons

- A customer service agent logging ongoing issues outside the chat thread

- A legal agent saving past case summaries for retrieval

Write is the foundation of long-term memory in AI workflows.
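
As a rough illustration of the scratchpad idea, here is a minimal sketch in Python. The `Scratchpad` class, its JSON file layout, and the note contents are all assumptions made for this example, not part of any particular framework. It shows one session writing a plan and a later session reading it back.

```python
import json
import tempfile
from pathlib import Path


class Scratchpad:
    """Hypothetical file-backed note store that survives across sessions."""

    def __init__(self, path):
        self.path = Path(path)
        # Load earlier notes if a previous session already wrote some.
        if self.path.exists():
            self.notes = json.loads(self.path.read_text(encoding="utf-8"))
        else:
            self.notes = {}

    def write(self, key, value):
        """Store a note and flush it to disk right away."""
        self.notes[key] = value
        self.path.write_text(json.dumps(self.notes, indent=2), encoding="utf-8")

    def read(self, key, default=None):
        """Return a stored note, or the default if the key is unknown."""
        return self.notes.get(key, default)


# Session 1: the assistant records its plan outside the prompt.
store_path = Path(tempfile.mkdtemp()) / "scratchpad.json"
first_session = Scratchpad(store_path)
first_session.write("plan", "1. parse input 2. validate 3. emit report")

# Session 2: a fresh object (standing in for a new session) resumes the plan.
second_session = Scratchpad(store_path)
resumed_plan = second_session.read("plan")
```

A real system would more likely use a database or a vector store, but the pattern is the same: write outside the prompt, read back when needed.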

Step 2: SELECT — Smart Retrieval, Not Blind Recall

Just because you stored it doesn’t mean it all belongs in the prompt. Select strategies prioritize and retrieve the most relevant context from available memory. This avoids flooding the model with too much noise.

Techniques Used:

- Semantic search

- Context prioritization

- Retrieval-Augmented Generation (RAG)

Examples in Action:

- A medical bot pulls only the latest lab results, ignoring older ones

- A support chatbot fetches just the last 3 unresolved tickets

- A travel planner brings in visa and weather info for only the active trip

Select ensures the model sees what matters — and only that.
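
The sketch below illustrates selection with a deliberately simple stand-in: it ranks stored items by how many words they share with the query. Production systems would typically use embeddings for semantic search; the word-overlap scoring, the `select_context` function, and the sample tickets are assumptions made for this example.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_context(query, memory, top_k=2):
    """Return up to top_k memory items that share the most words with the query."""
    query_words = tokenize(query)
    scored = []
    for item in memory:
        overlap = len(query_words & tokenize(item))
        if overlap > 0:  # items with no shared words never reach the prompt
            scored.append((overlap, item))
    # Highest score first; Python's stable sort keeps the original order on ties.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:top_k]]


# Made-up memory contents for illustration.
memory = [
    "Ticket 101: login page times out, unresolved",
    "Ticket 102: invoice PDF has wrong currency, resolved",
    "User prefers email over phone contact",
    "Ticket 103: login fails after password reset, unresolved",
]
selected = select_context("why does login keep failing", memory, top_k=2)
```

Here only the two login tickets are selected; the invoice ticket and the contact preference stay in storage and out of the prompt.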

Step 3: COMPRESS — Trim the Fat, Keep the Meaning

Token space is precious. Compress strategies aim to reduce the size of the prompt input without sacrificing essential information. The goal is not simply shorter text, but text that keeps the facts the model needs while dropping repetition and filler.

Key Techniques:

- Recursive summarization

- Fact extraction

- Redundancy pruning

Practical Examples:

- An email assistant summarizes threads and extracts action items

- A trading bot condenses market data into high-level insights

- A research tool collapses entire papers into bullet-pointed highlights

Compress is how we fit a library of thought into a single prompt window.
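
Recursive summarization normally calls a model, so the sketch below covers only the parts that can be shown without one: redundancy pruning followed by trimming to a budget. It assumes word count as a crude proxy for tokens, and the function names and sample thread are hypothetical.

```python
def prune_redundant(messages):
    """Drop messages that repeat an earlier one, ignoring case and spacing."""
    seen = set()
    unique = []
    for message in messages:
        key = " ".join(message.lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(message)
    return unique


def fit_to_budget(messages, max_words):
    """Keep the most recent messages whose combined word count fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_words:
            break  # older messages are dropped once the budget is spent
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


# Made-up email thread for illustration.
thread = [
    "Please send the Q3 report by Friday.",
    "please send the  Q3 report by friday.",
    "Also loop in finance on the budget numbers.",
    "Reminder: the Q3 report is due Friday.",
]
compressed = fit_to_budget(prune_redundant(thread), max_words=16)
```

A real pipeline would count tokens with the model's own tokenizer and might summarize the dropped messages instead of discarding them.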

Step 4: ISOLATE — Avoid Cross-Talk and Cognitive Collision

Context collisions are real. When an AI model processes multiple roles, agents, or domains in the same session, information bleed can lead to confusion. Isolate ensures separation of context streams.

How It Works:

- Sub-agent context slots

- Sandboxed tools

- Topic-specific partitions

Real-World Use Cases:

- A multi-agent research system gives each agent its own isolated data

- PII in HR bots is handled in a private context, separate from general logic

- A code execution agent stores tool outputs separately from prompts

Isolate protects integrity by separating streams of thought.
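
One way to picture sub-agent context slots is a separate message buffer per agent, with an orchestrator that routes each message to exactly one buffer. The sketch below assumes that design; `AgentContext`, `Orchestrator`, and the sample messages are hypothetical names and data, not an API from any specific agent framework.

```python
class AgentContext:
    """Hypothetical per-agent message buffer with no shared state."""

    def __init__(self, name, system_prompt):
        self.name = name
        self.messages = [{"role": "system", "content": system_prompt}]

    def add(self, role, content):
        self.messages.append({"role": role, "content": content})


class Orchestrator:
    """Routes each message to exactly one agent's context."""

    def __init__(self):
        self.contexts = {}

    def register(self, name, system_prompt):
        self.contexts[name] = AgentContext(name, system_prompt)

    def send(self, name, content):
        self.contexts[name].add("user", content)

    def view(self, name):
        # Return a copy so appending to the result does not change the agent's own list.
        return list(self.contexts[name].messages)


orchestrator = Orchestrator()
orchestrator.register("hr", "You handle employee records.")
orchestrator.register("general", "You answer policy questions.")

# Made-up messages: the sensitive one goes only to the HR agent.
orchestrator.send("hr", "Employee 4411 salary review is due.")
orchestrator.send("general", "How many vacation days do new hires get?")

general_view = orchestrator.view("general")
```

Because the general agent's context is built only from its own buffer, the HR message never appears in the prompt it sees.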

Side-by-Side: 4 Pillars at a Glance

| Pillar | Purpose | Example |
|---|---|---|
| Write | Store important info for later use | Coding bot logs pseudocode |
| Select | Fetch only relevant data | Support bot fetches recent tickets |
| Compress | Summarize and reduce token load | Inbox assistant trims threads |
| Isolate | Partition context by topic, role, or agent | Multi-agent systems maintain clean boundaries |

Metaphor: Think Like a Student, Design Like a System

Write is like taking classroom notes, Select is reviewing only tonight’s homework, Compress is making a summary sheet for exams, and Isolate is organizing your notes by subject so nothing gets mixed up.

Conclusion: Why These 4 Pillars Matter

Together, Write, Select, Compress, and Isolate form the backbone of context engineering. They enable:

- Scalable memory beyond a single prompt

- Relevance over redundancy

- Speed without sacrificing essential information

- Workflow orchestration without chaos

Whether you’re building AI agents, designing LLM pipelines, or optimizing prompt-based systems, mastering these four pillars helps your system act not just intelligently, but intentionally.