Last updated on November 9th, 2023 at 11:19 am

GPT-4: The Language Model That Will Change the Way You Think About AI

OpenAI has introduced GPT-4, a multimodal large language model (LLM) that can process image and text inputs and produce text output.

INTRODUCTION

GPT-4 exhibits human-level performance on various professional and academic benchmarks; for example, it passes a simulated bar exam with a score in the top 10% of test takers. It is more reliable and creative than its predecessor, GPT-3.5, and can handle more nuanced instructions.

According to OpenAI, it was provided with more human feedback during training, including insights from ChatGPT users and experts in AI safety and security, to further improve its performance. This feedback helps to improve GPT-4’s behavior and align it with ethical and safety standards.

Compared to its predecessor, GPT-3.5, GPT-4 is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses on OpenAI’s internal evaluations, making it safer and more aligned with ethical standards.

It has undergone training on Microsoft Azure AI supercomputers and is now accessible through ChatGPT Plus and as an API for developers to integrate into their applications and services.

OpenAI is currently utilizing GPT-4 in-house to aid in tasks like sales, content moderation, and programming. Additionally, it is being employed to assist humans in evaluating AI outputs as part of their alignment strategy.

Advanced Capabilities of GPT-4

Creativity:

GPT-4 is designed to be more creative and collaborative than previous versions of the system. It has the ability to produce, modify, and refine creative and technical writing assignments in collaboration with users. These tasks might include creating music, writing a screenplay, or understanding a user’s writing style.

This capability is achieved through the system’s advanced language processing and generation algorithms, which allow it to produce more sophisticated and nuanced responses than earlier models.

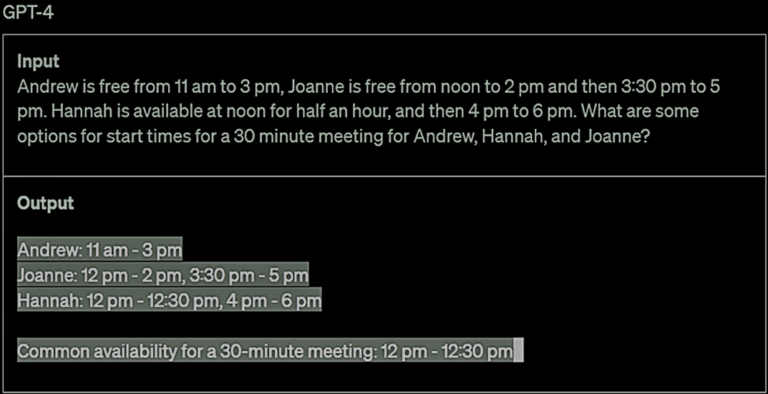

One notable feature of GPT-4 is its advanced reasoning capabilities, which surpass those of ChatGPT, a language model that OpenAI also developed. For example, when presented with a scheduling problem, GPT-4 can identify the common availability for a 30-minute meeting more quickly and accurately than ChatGPT.

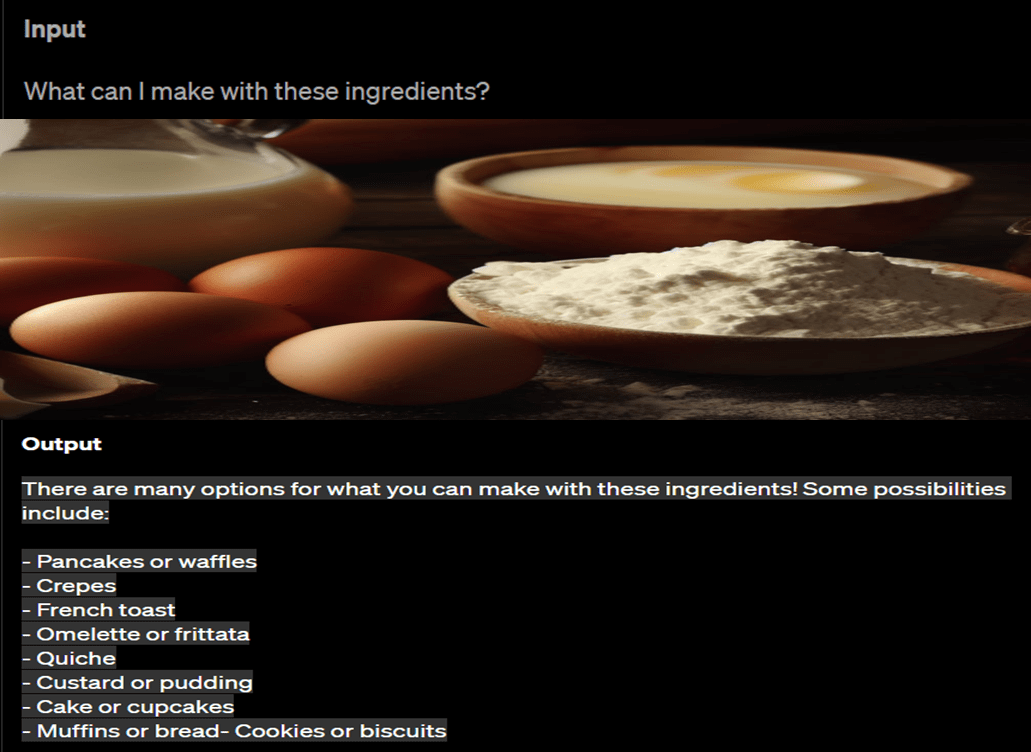

Visual Input:

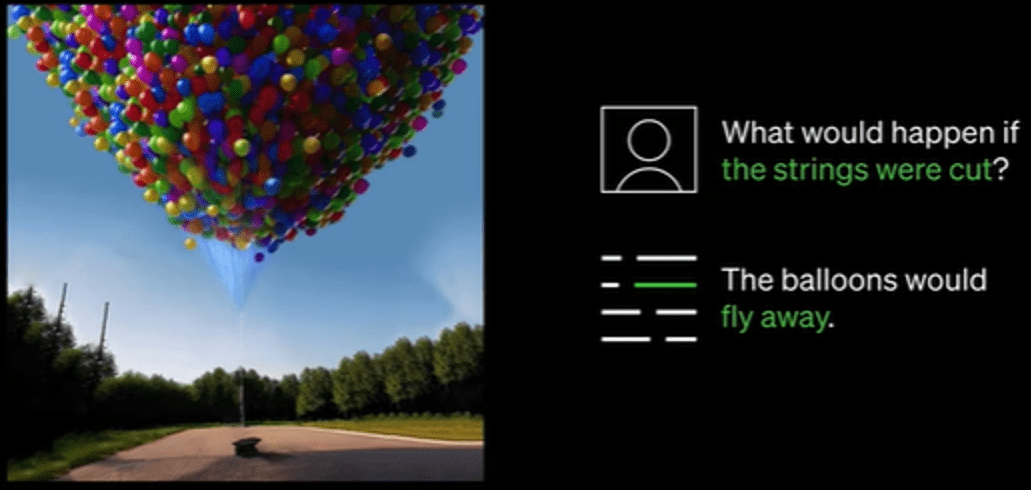

Unlike earlier versions of GPT, GPT-4 is designed to take in visual input in addition to text. This means that the system can analyze and generate responses based on images or other visual data, which can make it more useful for tasks such as image captioning or visual storytelling. The system achieves this capability through a combination of advanced computer vision algorithms and deep learning techniques.

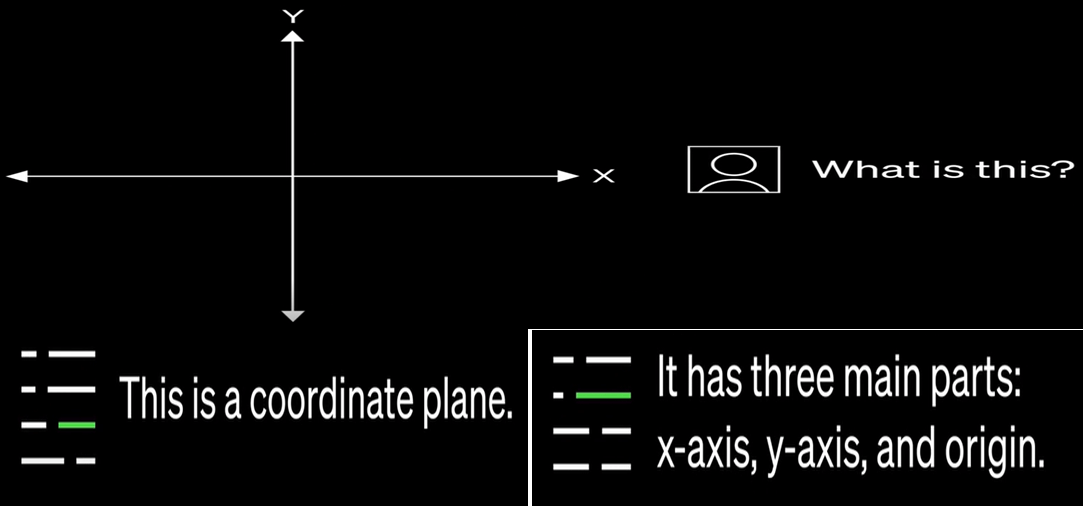

Long Context:

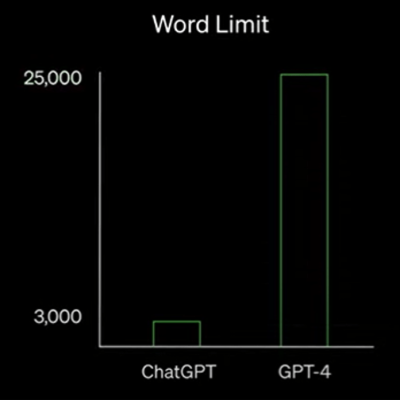

GPT-4 is designed to be able to understand and use longer contexts than earlier models. This means that it can analyze and generate responses based on a larger body of information, which can make it more accurate and useful for tasks such as language translation or question answering.

The system achieves this capability through a combination of advanced language modeling techniques and more powerful computing resources.

In comparison to ChatGPT, GPT-4 accepts content 8X longer.

By being able to understand longer contexts, GPT-4 can generate more nuanced and accurate responses, which can help to improve the overall quality of its output.

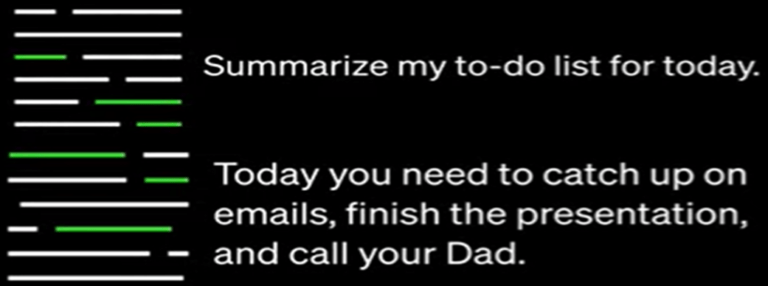

Steerability:

OpenAI has been working on different aspects of defining the behavior of AI, including steerability. They have introduced a new feature called “system messages”. Developers and users can now prescribe their AI’s style and task by describing those directions in the “system” message. This allows for significantly more customization within bounds, improving the user’s experience.
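As a rough illustration of how a system message sits alongside the user's message, the sketch below assembles a request body in the Chat Completions message format. The helper name `build_chat_request` and both prompt strings are made up for this example, and nothing is sent over the network.

```python
import json


def build_chat_request(system_prompt, user_prompt, model="gpt-4"):
    """Assemble a Chat Completions style request body (hypothetical helper)."""
    return {
        "model": model,
        "messages": [
            # The system message prescribes the assistant's style and task.
            {"role": "system", "content": system_prompt},
            # The user message carries the actual question.
            {"role": "user", "content": user_prompt},
        ],
    }


# Illustrative prompts only; any style or task description could go here.
request_body = build_chat_request(
    "You are a Socratic tutor. Never give the answer directly; ask guiding questions.",
    "How do I solve 3x + 2 = 14?",
)
print(json.dumps(request_body, indent=2))
```

Changing only the system message changes the assistant's persona while the user's question stays the same, which is what makes this form of customization convenient.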

Improved performance:

GPT-4 exhibits enhanced performance compared to its predecessor GPT-3.5, scoring 40% higher on internal adversarial factuality evaluations. The model also excels on external benchmarks such as TruthfulQA, which assesses its proficiency in distinguishing facts from a deliberately-selected set of incorrect statements.

Wide range of domains and Language support:

GPT-4 exhibits similar capabilities on a range of domains, including documents with text and photographs, diagrams, or screenshots. It also supports more languages than its predecessor, ChatGPT.

Use Cases of GPT-4

Use Case #1:

Duolingo, a popular language learning app, has integrated OpenAI’s latest language model, GPT-4, to enhance its features. The app’s subscription tier, Duolingo Max, now offers two new features: Role Play, which provides AI conversation partners, and Explain My Answer, which offers accurate feedback on mistakes. GPT-4 allows for more immersive conversations about niche contexts and simplifies the app’s engineering process. Currently available in Spanish and French, the company plans to expand to more languages.

Use Case #2:

Be My Eyes, a Danish startup connecting volunteers with visually impaired individuals, is developing a Virtual Volunteer powered by GPT-4. The tool recognizes and names image contents, analyzes them, and provides helpful information. It also helps visually impaired individuals navigate complicated websites and e-commerce sites, potentially increasing their independence. The company is beta-testing the tool and plans to make it available to users soon.

Use Case #3:

Stripe, a payment platform, has utilized GPT-4 to improve its user experience and combat fraud. The platform used GPT-4 to customize support, answer technical questions, and scan for fraud. The company plans to expand its potential applications, such as deploying it as a business coach.

Use Case #4:

Morgan Stanley, a leader in wealth management, has deployed GPT-4 to organize its extensive knowledge base. The model powers an internal-facing chatbot that performs a comprehensive search of wealth management content, effectively unlocking the cumulative knowledge of Morgan Stanley Wealth Management. Over 200 employees are querying the system on a daily basis and providing feedback.

Use Case #5:

Khan Academy, a non-profit organization dedicated to providing free education, is piloting Khanmigo, an AI-powered virtual tutor and classroom assistant. GPT-4 enables Khanmigo to ask individualized questions and personalize learning for every student. Early testing indicates that GPT-4 may be able to contextualize the greater relevance of what students are studying and teach specific points of computer programming.

Use Case #6:

Iceland has partnered with OpenAI to use GPT-4 to preserve the Icelandic language, which is at risk of de facto extinction. A team of volunteers trained GPT-4 on proper Icelandic grammar and cultural knowledge using a process called Reinforcement Learning from Human Feedback. The model produces Icelandic with grammatical errors, but RLHF enables better responses, and it is able to generate a poem about the vagaries of modern life in the style of an ancient Icelandic poem from the Poetic Edda of Norse mythology.

GPT-4 API

OpenAI has recently launched ChatGPT Plus, a subscription-based service that allows users to access GPT-4 on chat.openai.com. However, the service has a usage cap that may be adjusted depending on the system’s demand and performance in practice.

Developers who want to use the GPT-4 API need to sign up for the waitlist.

Researchers who are investigating AI alignment issues or the societal impact of AI can take advantage of the Researcher Access Program, which offers subsidized access to the GPT-4 API.

The GPT-4 API utilizes the same ChatCompletions API as GPT-3.5 Turbo, and developers can make text-only requests to the GPT-4 model once they have obtained access.

The pricing for the GPT-4 API is $0.03 per 1k prompt tokens and $0.06 per 1k completion tokens, with default rate limits set at 40k tokens per minute and 200 requests per minute.

The GPT-4 model has a context length of 8,192 tokens, but OpenAI is also providing limited access to its 32,768-context version, gpt-4-32k, which will be updated automatically over time. The pricing for the 32K engine is $0.06 per 1k prompt tokens and $0.12 per 1k completion tokens.
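To make the pricing concrete, here is a small sketch that estimates the cost of a single request and checks whether it fits in a model's context window. The prices and context lengths are the ones quoted above; the function names and the token counts in the usage lines are illustrative assumptions.

```python
# USD per 1,000 tokens, as quoted in this article.
PRICES_PER_1K = {
    "gpt-4": {"prompt": 0.03, "completion": 0.06},
    "gpt-4-32k": {"prompt": 0.06, "completion": 0.12},
}

# Context length in tokens for each model, as quoted in this article.
CONTEXT_LENGTHS = {"gpt-4": 8_192, "gpt-4-32k": 32_768}


def estimate_cost(model, prompt_tokens, completion_tokens):
    """Return the estimated cost in USD of one request."""
    price = PRICES_PER_1K[model]
    total = prompt_tokens * price["prompt"] + completion_tokens * price["completion"]
    return total / 1000


def fits_context(model, prompt_tokens, completion_tokens):
    """Prompt and completion tokens share the same context window."""
    return prompt_tokens + completion_tokens <= CONTEXT_LENGTHS[model]


# Illustrative request: 1,500 prompt tokens and 500 completion tokens.
print(f"${estimate_cost('gpt-4', 1500, 500):.4f}")      # $0.0750
print(f"${estimate_cost('gpt-4-32k', 1500, 500):.4f}")  # $0.1500

# A 7,000-token prompt with a 1,500-token answer exceeds the 8K window.
print(fits_context("gpt-4", 7000, 1500))      # False
print(fits_context("gpt-4-32k", 7000, 1500))  # True
```

Because the 32K model costs twice as much per token, it is usually worth checking whether a request fits the 8K window before reaching for the larger one.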

OpenAI is continuously improving the GPT-4 model quality for long context and seeks feedback on how it performs for different use cases. Since OpenAI processes requests for the 8K and 32K engines at different rates based on capacity, developers may receive access to them at different times.

OpenAI is excited about GPT-4’s potential to improve people’s lives by powering various applications. However, they acknowledge that there is still a lot of work to be done and look forward to improving this model through the collective efforts of the community.

Limitations of GPT-4

Reliability:

GPT-4 still has limitations and can “hallucinate” facts and make reasoning errors, which can be particularly problematic in high-stakes contexts. Care should be taken when using language model outputs, with specific protocols and additional context, such as human review or avoiding high-stakes uses altogether.

Bias:

The model can have various biases in its outputs, and while progress has been made, there is still more to be done. AI systems should reflect a wide range of users’ values, be customizable within broad bounds, and be shaped by public input on what those bounds should be.

Limited Knowledge:

GPT-4 generally lacks knowledge of events that have occurred after September 2021, which can limit its performance on current events and trends. It also does not learn from its experience and can make simple reasoning errors or be overly gullible in accepting obviously false statements from a user.

Confidence in predictions:

GPT-4 can be confidently wrong in its predictions, not taking care to double-check work when it’s likely to make a mistake. While the base pre-trained model is highly calibrated, the calibration is reduced through the current post-training process.
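Calibration here means that the model's stated confidence matches how often it is actually right. One common way to quantify the gap is expected calibration error (ECE); the sketch below is a generic illustration of that metric, not OpenAI's evaluation code, and the confidence values in the usage lines are invented for the example.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between mean confidence and accuracy per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Map a confidence in [0, 1] to a bin index; 1.0 goes in the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Each bin contributes in proportion to how many predictions it holds.
        ece += (len(members) / total) * abs(mean_conf - accuracy)
    return ece


# Invented data: a model that says "90% sure" but is right 1 time in 4.
overconfident = expected_calibration_error([0.9] * 4, [True, False, False, False])

# Invented data: confidence of 1.0 when right and 0.0 when wrong.
well_calibrated = expected_calibration_error(
    [1.0, 1.0, 0.0, 0.0], [True, True, False, False]
)
print(round(overconfident, 2), round(well_calibrated, 2))
```

A lower value means confidence tracks accuracy more closely; a "confidently wrong" model shows up as a large gap in the high-confidence bins.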

Risks and Mitigations

The development of the GPT-4 language model carries inherent risks of producing harmful or erroneous information. To address these risks, the GPT-4 team has collaborated with experts from various fields to test the model’s behavior in high-risk areas and has utilized their feedback to enhance the model’s safety features. The team has also included a safety reward signal in the model’s reinforcement learning process to train it to reject requests for dangerous content.

Despite these efforts, there is still a possibility of generating content that breaches usage guidelines. Therefore, it is necessary to complement the above measures with deployment-time safety techniques such as monitoring for abuse. Overall, these measures aim to minimize the potential risks associated with the use of GPT-4 and ensure its safe and responsible use.

Other Aspects of GPT-4

Training Process:

The GPT-4 language model was trained on both publicly available data and licensed data to predict the next word in a document. Because the base model can respond to a prompt in a wide variety of ways, the team fine-tunes its behavior using reinforcement learning from human feedback (RLHF) to align it with the user’s intent. However, according to OpenAI, the model’s capabilities come mainly from pre-training: RLHF does not improve exam performance, and the base model needs prompt engineering to even know that it should answer a question.

Predictable Scaling:

According to OpenAI, the GPT-4 project has emphasized creating a deep learning stack that can be scaled predictably. The team has achieved this by developing infrastructure and optimization techniques that exhibit consistent behavior across various scales.

By extrapolating from models trained with far less compute, they accurately forecast GPT-4’s final loss on their internal codebase and its pass rate on a subset of the HumanEval dataset. Some capabilities remain hard to predict, however, such as performance on tasks from the Inverse Scaling Prize.
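The idea of forecasting a large run from smaller ones can be sketched as fitting a power law to (compute, loss) pairs and extrapolating. This is a simplified illustration, not OpenAI's method: it assumes a pure power law `loss = a * compute ** b`, and the "small run" losses are synthetic values generated from a known law inside the code, not real measurements.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = log(a) + b * log(compute)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(value) for value in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), slope  # (a, b)


# Compute is expressed as a fraction of the full training budget (1.0).
small_runs_compute = [1e-4, 1e-3, 1e-2, 1e-1]
# Synthetic losses drawn from loss = 2.0 * compute ** -0.05 (illustrative only).
small_runs_loss = [2.0 * c ** -0.05 for c in small_runs_compute]

a, b = fit_power_law(small_runs_compute, small_runs_loss)

# Extrapolate to the full budget, which none of the small runs reached.
predicted_full_loss = a * 1.0 ** b
print(f"a={a:.3f}, b={b:.3f}, predicted loss at full compute={predicted_full_loss:.3f}")
```

With real training runs the points would be noisy and the fitted form might need an extra constant term, but the workflow is the same: fit on cheap runs, then read off the prediction at the target scale.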

OpenAI’s team is increasing its efforts to create methods that offer improved guidance on future machine learning capabilities.

OpenAI Evals Framework:

OpenAI Evals is a free, open-source software framework for evaluating language models like GPT-4. The software enables users to evaluate the model’s performance sample by sample, making it easy to identify weaknesses and prevent regressions.

The framework supports the creation of custom evaluation logic and includes templates that have been useful in-house. The ultimate aim of OpenAI Evals is to become a platform to share and crowdsource benchmarks that represent a wide range of challenging tasks and failure modes.
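To show what sample-by-sample evaluation looks like in principle, here is a minimal exact-match loop in plain Python. It does not use the OpenAI Evals library or its API; `run_eval`, `toy_model`, and the three samples are hypothetical stand-ins chosen for this sketch.

```python
def run_eval(samples, model_fn):
    """Score each sample by exact match and keep a per-sample record."""
    records = []
    for sample in samples:
        output = model_fn(sample["input"])
        records.append(
            {
                "input": sample["input"],
                "expected": sample["ideal"],
                "output": output,
                "passed": output.strip() == sample["ideal"],
            }
        )
    accuracy = sum(r["passed"] for r in records) / len(records)
    return accuracy, records


def toy_model(prompt):
    # Hypothetical stand-in for a real model call; it only knows two answers.
    answers = {"2 + 2 =": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "unknown")


samples = [
    {"input": "2 + 2 =", "ideal": "4"},
    {"input": "Capital of France?", "ideal": "Paris"},
    {"input": "Capital of Iceland?", "ideal": "Reykjavik"},
]

accuracy, records = run_eval(samples, toy_model)
failures = [r["input"] for r in records if not r["passed"]]
print(f"accuracy={accuracy:.2f}, failures={failures}")
```

Keeping a record per sample, rather than only the aggregate score, is what makes it possible to see exactly which inputs a model version fails on.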

To demonstrate the capabilities of OpenAI Evals, OpenAI has created a logic puzzle evaluation as an example and also included notebooks implementing academic benchmarks and CoQA subsets. All these efforts ensure that GPT-4 is safe, predictable, and can be effectively evaluated.

GPT-4V: Advancing Multimodal AI Capabilities

GPT-4V stands as a pioneering iteration of the GPT-4 model, introducing robust image-analyzing capabilities. Unlike its predecessors, GPT-4V can seamlessly integrate textual and visual inputs, making it a truly multimodal AI. This advancement allows the model to not only understand the contents of images but also connect this visual information with textual inquiries. It can explain image content and contextual meanings, demonstrating a substantial leap in AI comprehension.

Visual Proficiency and Commercial Potential

The visual capabilities of GPT-4V are noteworthy. It excels in analyzing images, offering insightful responses to questions related to visual content. Moreover, its multimodal processing abilities empower it to parse both text and images, expanding its versatility. With an eye on commercial applications, GPT-4V holds potential in revolutionizing customer service accessibility for businesses. However, it’s essential to note that this cutting-edge technology is not without its limitations.

Challenges and Future Prospects

While GPT-4V represents a significant leap in AI technology, it grapples with challenges. The model occasionally generates inaccurate information confidently, a phenomenon known as hallucination. Additionally, it might struggle with precise inferences, overlooking critical details in both images and text. Despite these limitations, GPT-4V is a work in progress that reflects the ongoing evolution of AI capabilities. Access to GPT-4V requires a ChatGPT Plus membership, emphasizing its premium status and the continuous efforts to refine its functionalities.

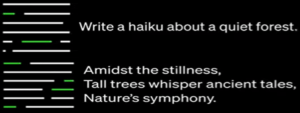

GPT-4 Vision Examples

-

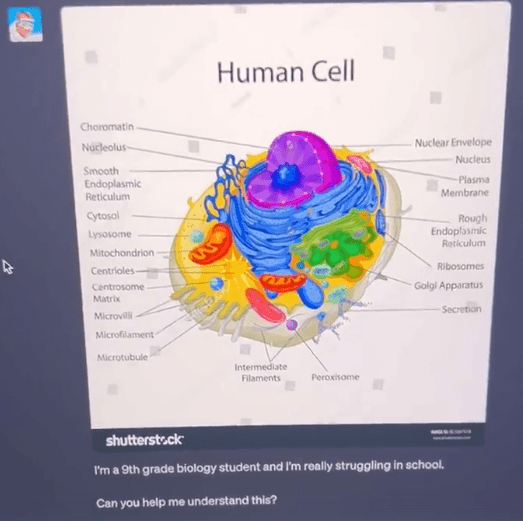

The future of education. Right there:

ChatGPT breaks down this diagram of a human cell for a 9th grader. This is the future of education. View Tweet -

You like a style? Name it:

Using GPT-4 Vision to name never-before-seen architectural styles created with Midjourney. View Tweet -

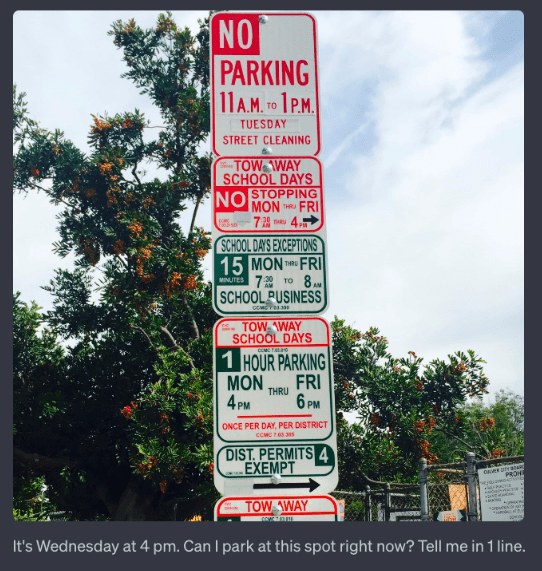

Can you get park here?

I will never get a parking ticket again. View Tweet

-

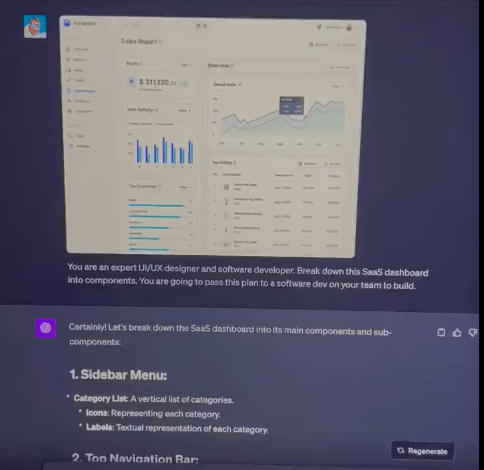

From a SaaS dashboard to code:

I gave ChatGPT a screenshot of a SaaS dashboard and it wrote the code for it. View Tweet -

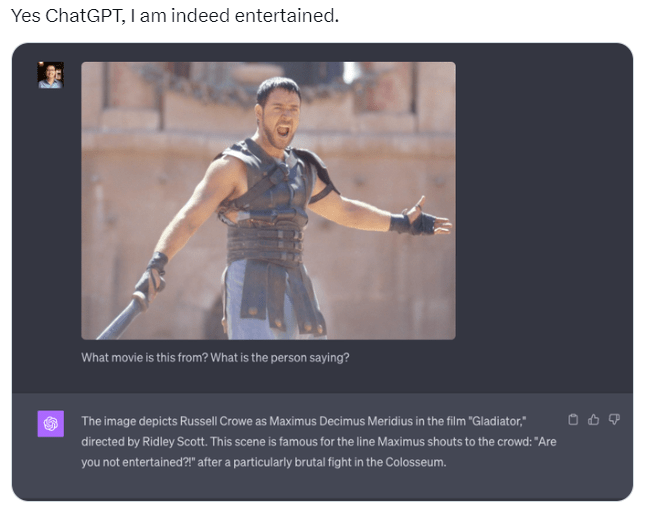

ChatGPT knows any film. Any sentence:

Yes ChatGPT, I am indeed entertained. View Tweet

-

Diagram master reader:

ChatGPT image recognition is here and it is magical! View Tweet

-

Yet another insane diagram to understand:

ChatGPT image recognition vs “Crazy Pentagon PowerPoint Slides:” View Tweet

-

Understand complex images:

This is absolutely wild. I am completely speechless. View Tweet

-

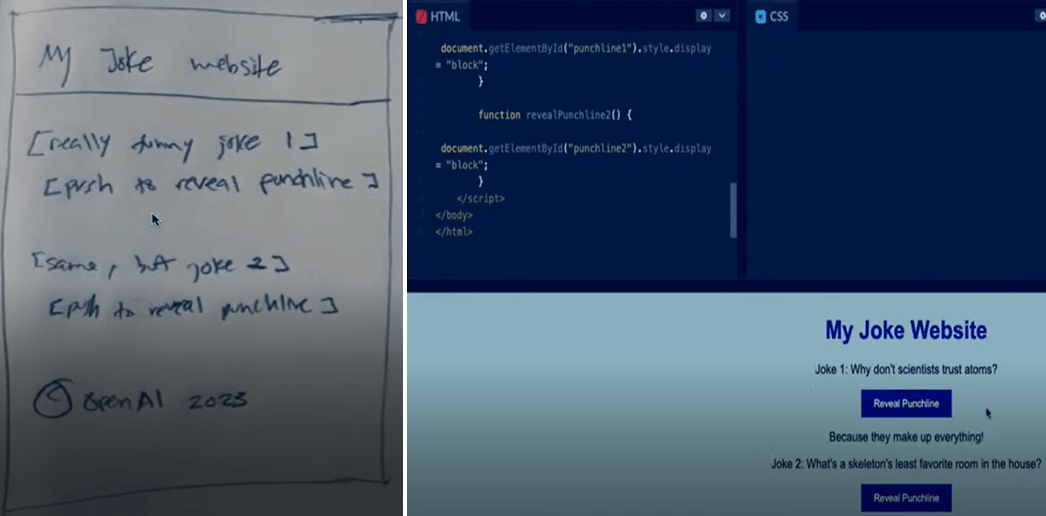

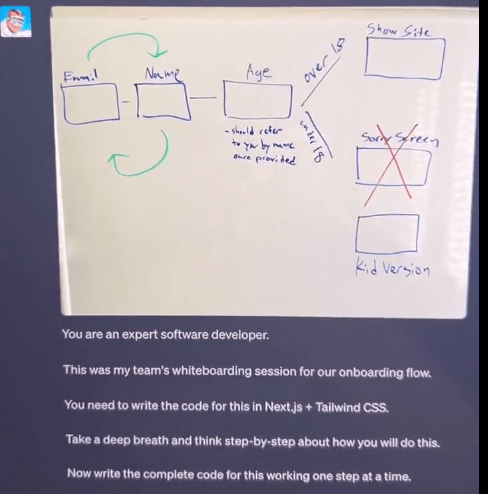

From whiteboard to editable notes:

You can give ChatGPT a picture of your team’s whiteboarding session and have it write the code for you. View Tweet -

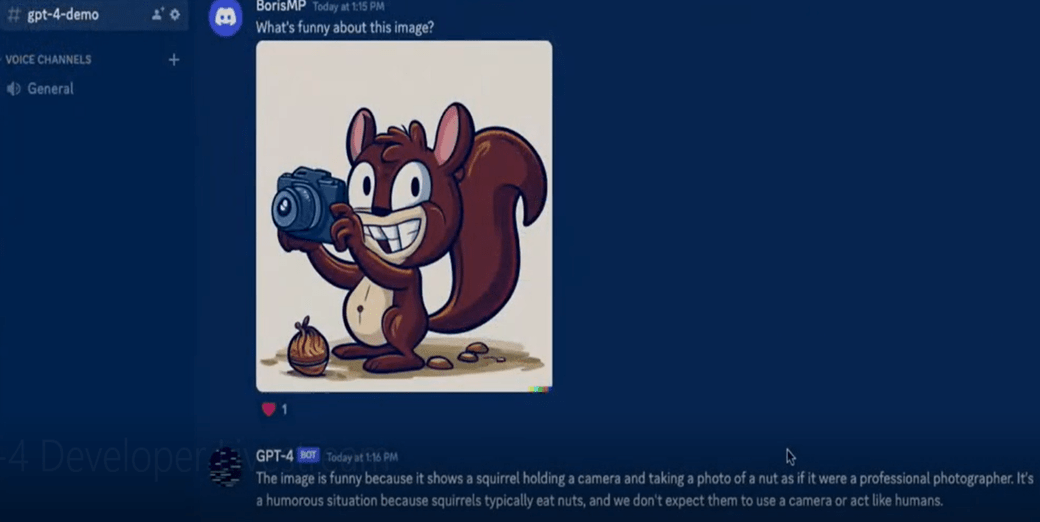

Why is it funny?

Fascinating and scary how quickly this is evolving. View Tweet

GPT-4 Turbo

OpenAI has launched GPT-4 Turbo, a high-powered model with a 128K context window. This innovation allows it to process the equivalent of over 300 pages of text in one go, enabling more complex and detailed interactions in applications.

Additionally, developers will benefit from its cost-effectiveness: compared to GPT-4, it is three times cheaper for input tokens and two times cheaper for output tokens.
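The savings can be worked out from figures already given in this article. The sketch below assumes the comparison baseline is the 8K GPT-4 pricing quoted earlier ($0.03 and $0.06 per 1k tokens) and derives the GPT-4 Turbo prices from the stated ratios; the request size is an arbitrary example.

```python
# GPT-4 (8K context) prices in USD per 1,000 tokens, as quoted earlier.
GPT4_PROMPT_PRICE = 0.03
GPT4_COMPLETION_PRICE = 0.06

# Implied GPT-4 Turbo prices: 3x cheaper input, 2x cheaper output.
turbo_prompt_price = GPT4_PROMPT_PRICE / 3
turbo_completion_price = GPT4_COMPLETION_PRICE / 2


def request_cost(prompt_tokens, completion_tokens, prompt_price, completion_price):
    """Cost in USD of one request at the given per-1k-token prices."""
    return (prompt_tokens * prompt_price + completion_tokens * completion_price) / 1000


# Arbitrary example request: 6,000 prompt tokens and 1,000 completion tokens.
gpt4_cost = request_cost(6000, 1000, GPT4_PROMPT_PRICE, GPT4_COMPLETION_PRICE)
turbo_cost = request_cost(6000, 1000, turbo_prompt_price, turbo_completion_price)

print(f"GPT-4: ${gpt4_cost:.2f}, GPT-4 Turbo: ${turbo_cost:.2f}")
```

Since the input discount is larger than the output discount, prompt-heavy workloads such as long-document analysis see the biggest relative savings.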

CONCLUSION

OpenAI’s GPT-4 language model is a significant milestone in the development of deep learning technology. Its advanced capabilities in problem-solving, creativity, collaboration, and the ability to process visual input and understand longer contexts make it a powerful tool for a wide range of applications.

GPT-4’s training run was unprecedentedly stable, and GPT-4 became OpenAI’s first large model whose training performance could be accurately predicted ahead of time. Its performance was improved by six months of iterative alignment, using lessons from the adversarial testing program and from ChatGPT.

GPT-4’s text input capability is available through ChatGPT and the API, while the image input capability is being developed with a single partner. OpenAI Evals, the framework for automated evaluation of AI model performance, is also open-sourced to help guide further improvements.

Despite its advancements, GPT-4 still has known limitations that OpenAI is working to address, such as social biases, hallucinations, and adversarial prompts. OpenAI encourages transparency, user education, and wider AI literacy to ensure responsible adoption of these models.

Overall, GPT-4 is a significant milestone in OpenAI’s efforts to scale up deep learning and empower everyone with advanced technologies.